ChatGPT may alert police when teens discuss suicide plans, says OpenAI

2025-09-30 14:28:09


ChatGPT could soon alert police when teenagers discuss suicide. OpenAI CEO and co-founder Sam Altman revealed the change during a recent interview. ChatGPT, the widely used artificial intelligence chatbot that can answer questions and hold conversations, has become a daily tool for millions. His comments signal a major shift in how the AI company may handle mental health crises.


Sam Altman, CEO of OpenAI Inc. (Nathan Howard/Bloomberg via Getty Images)

Why OpenAI is considering police alerts

Altman said: “It’s very reasonable for us to say in cases of young people talking about suicide, seriously, where we cannot get in touch with the parents, we do call authorities.”

Until now, ChatGPT’s response to suicidal thoughts has been to point users to hotlines. This new policy signals a shift from passive suggestions to active intervention.

Altman admitted that the change comes at a cost to privacy. He stressed that user data is important, but acknowledged that preventing tragedy must come first.

Teens can easily access ChatGPT on a mobile device. (Jaap Arriens/NurPhoto via Getty Images)

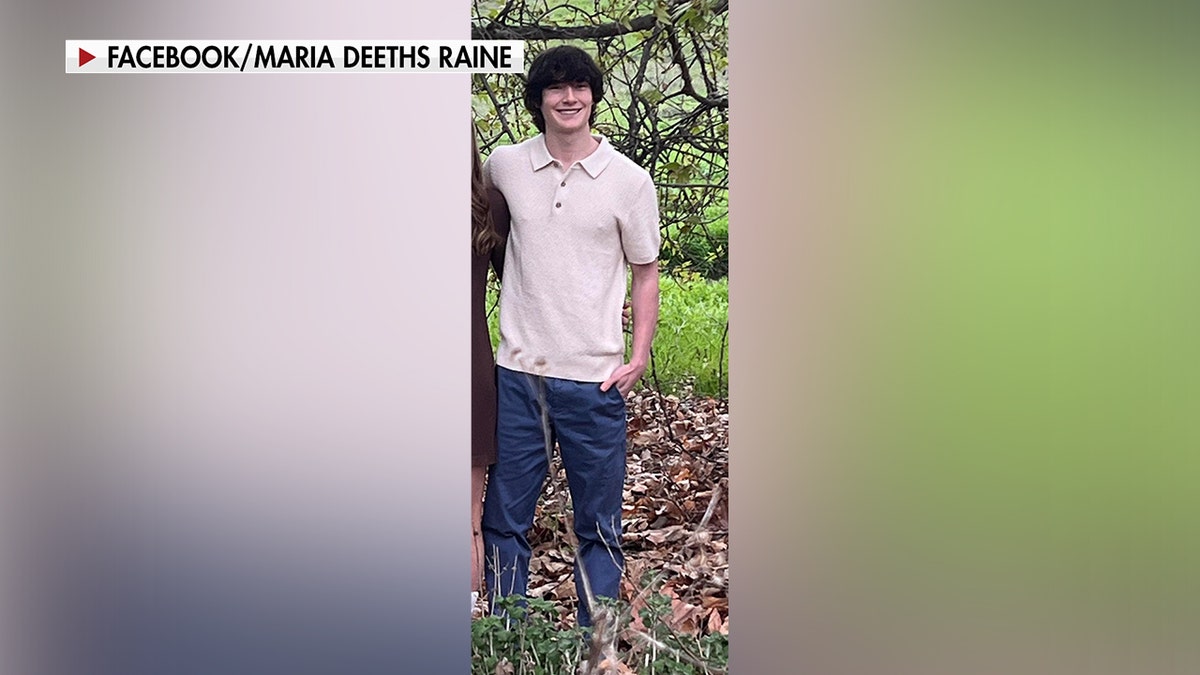

The tragedies that prompted action

The shift follows lawsuits linked to teen suicides. The most prominent case involves Adam Raine, a 16-year-old from California. His family claims that ChatGPT provided a “step-by-step playbook” for suicide, including instructions for tying a noose and even drafting a note.

After Raine’s death in April, his parents filed a lawsuit against OpenAI. They argued that the company failed to prevent its AI from guiding their son toward harm.

Another lawsuit targets a rival chatbot. According to that claim, a 14-year-old took his own life after forming an intense connection with a bot modeled on a TV character. Together, these cases highlight how quickly teens can form unhealthy bonds with AI.

Adam Raine, a California teen, took his own life in April 2025 amid claims that ChatGPT coached him. (Raine family)

How common is the problem?

Altman pointed to global numbers to justify stronger measures. He noted that about 15,000 people take their own lives every week worldwide. With about 10% of the world using ChatGPT, he estimated that roughly 1,500 of those people may have interacted with the chatbot each week.

Research supports concerns about teens’ reliance on AI. A Common Sense Media survey found that 72% of teens use AI tools, with one in eight seeking mental health support.

OpenAI’s 120-day plan

In a blog post, OpenAI outlined steps to strengthen protections. The company said it will:

- Expand interventions for people in crisis.

- Make it easier to reach emergency services.

- Enable connections to trusted contacts.

- Roll out stronger safeguards for teens.

To guide these efforts, OpenAI created an Expert Council on Well-Being and AI. This group includes specialists in youth development, mental health and human-computer interaction. In addition, OpenAI works with a Global Physician Network of more than 250 doctors in 60 countries.

These experts help design parental controls and safety guidelines. Their role is to ensure that AI responses align with the latest mental health research.

A teenager uses ChatGPT. (Frank Rumpenhorst/picture alliance via Getty Images)

New protections for families

Within weeks, parents will be able to:

- Link their ChatGPT account with their teen’s.

- Adjust the model’s behavior to match age-appropriate rules.

- Disable features such as memory and chat history.

- Get alerts if the system detects acute distress.

These alerts are designed to notify parents early. However, Altman admitted that when parents cannot be reached, police may become the backup option.

ChatGPT can be used by teens to complete homework. (Kurt “CyberGuy” Knutsson)

The limits of AI safeguards

OpenAI admits that its safeguards can weaken over time. While short chats often redirect users to crisis hotlines, long conversations can erode built-in protections. This “safety degradation” has already led to cases in which teens received unsafe advice after extended use.

Experts warn that relying on AI for mental health support can be risky. ChatGPT is trained to sound human, but it cannot replace professional therapy. The worry is that vulnerable teens may not know the difference.

Steps that parents can take now

Parents should not wait for new features. Here are immediate ways to keep teens safe:

1) Start regular conversations

Ask open-ended questions about school, friendships and feelings. Honest dialogue reduces the chance that teens turn only to AI for answers.

2) Set digital boundaries

Use parental controls on devices and apps. Limit access to AI tools late at night, when teens may feel most isolated.

3) Link accounts when available

Take advantage of the new OpenAI features that link parent and teen accounts for closer oversight.

4) Encourage professional support

Reinforce that mental health care is available through doctors, counselors or hotlines. AI should not be the only outlet.

5) Keep crisis contacts visible

Post numbers for hotlines and text lines where teens can see them. For example, in the United States, call or text 988 to reach the Suicide & Crisis Lifeline.

6) Watch for changes

Notice shifts in mood, sleep or behavior. Combine these signs with online patterns to catch risks early.


Kurt’s key takeaways

OpenAI’s plan to involve police shows how urgent the issue has become. AI has the power to connect, but it also carries risks when teens use it in moments of despair. Parents, experts and companies must work together to create safeguards that save lives without sacrificing trust.

Are you comfortable with AI companies alerting police if your teen shares suicidal thoughts online? Let us know by writing to us at Cyberguy.com/contact


Copyright 2025 Cyberguy.com. All rights reserved.
